SEO - 6 Other On-page Factors

Let us look at a few other on-page SEO factors to consider:

1. HTML Sitemap

2. Anchor Text

3. Clean URL

4. HTML Validations

5. Robots.txt Creation

6. Canonicalization



|

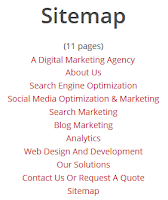

| Img 1 - Example of HTML Sitemap |

HTML Sitemaps

- The HTML sitemap page is a directory page which lists all the pages on a website in an organized fashion

- HTML sitemaps are useful for search engines as well as for human visitors

- This sitemap provides excellent internal link structure and anchor text

- Adding a link to the HTML sitemap on the homepage or in the footer helps search engines reach and crawl every page on the website
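As a rough illustration of the points above, an HTML sitemap can be generated from a list of page titles and URLs. This is a minimal sketch: the function name `build_html_sitemap` and the page list are hypothetical, and a real site would usually pull the pages from its CMS or database.

```python
from html import escape


def build_html_sitemap(pages):
    """Return an HTML <ul> that links to every (title, url) pair in `pages`."""
    items = [
        f'  <li><a href="{escape(url, quote=True)}">{escape(title)}</a></li>'
        for title, url in pages
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


# Hypothetical pages, for illustration only.
pages = [
    ("Home", "/"),
    ("Mobiles & Accessories", "/products/mobiles-accessories"),
]
sitemap_html = build_html_sitemap(pages)
print(sitemap_html)
```

Each page title becomes the anchor text of its link, so descriptive titles give the sitemap useful anchor text as well.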

Anchor Text

Anchor text is the visible text in a hyperlink. These links can be found in the navigation or in the body text.

- Anchor text is weighted highly in search engine algorithms because the linked text is usually relevant to the landing page

- Anchor text should be short, typically two or three words

- Anchor text should contain keywords relevant to the page it links to

- Anchor text is useful for search engines as well as human visitors
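To review the anchor text on a page against the guidelines above, the links can be extracted and their word counts listed. The sketch below uses Python's standard `html.parser` module; the class name, function name, and sample HTML are assumptions made for illustration.

```python
from html.parser import HTMLParser


class AnchorTextCollector(HTMLParser):
    """Collect (href, anchor text) for every <a> element in a document."""

    def __init__(self):
        super().__init__()
        self._in_link = False
        self._href = None
        self._parts = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._in_link = True
            self._href = dict(attrs).get("href")
            self._parts = []

    def handle_data(self, data):
        if self._in_link:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            # Collapse runs of whitespace inside the link text.
            text = " ".join("".join(self._parts).split())
            self.links.append((self._href, text))
            self._in_link = False


def anchor_texts(html):
    """Return a list of (href, anchor text, word count) tuples."""
    collector = AnchorTextCollector()
    collector.feed(html)
    collector.close()
    return [(href, text, len(text.split())) for href, text in collector.links]


# Hypothetical page fragment.
sample = (
    '<p>Browse <a href="/products/mobiles-accessories">mobile accessories</a> or '
    '<a href="/offers">click here for the latest deals</a>.</p>'
)
for href, text, words in anchor_texts(sample):
    print(f"{href}: {text!r} ({words} words)")
```

Links whose word count is well above three, or whose text says nothing about the target page, are candidates for rewriting.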

Img 2 - Example of Anchor Text

The “Clean” URL

A clean URL is a shorter, more meaningful identifier that is in line with the page content. A short but meaningful URL is easier for users to remember and type, and it is more search-engine friendly as well.

How to create clean URLs:

A clean URL should be descriptive and concise. It can contain relevant keywords that let both users and search engines know exactly what to expect from the page. Cleaning usually remaps the ?, &, and + symbols in a URL to more readily typeable characters.

For example:

http://www.snapdeal.com/products/mobiles-accessories

http://www.snapdeal.com/products/electronic-portable-audio-video-mp3?q=Brand%3AApple&sort=plrty

The first URL is cleaner than the second.
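One common way to produce clean path segments is to turn a page title or filter value into a lowercase, hyphen-separated "slug". The following is a minimal sketch that handles ASCII text only; the function name `slugify` is an assumption, not something prescribed by a particular framework.

```python
import re


def slugify(text):
    """Lowercase `text` and replace runs of non-alphanumeric characters with '-'."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower())
    return slug.strip("-")


print(slugify("Mobiles & Accessories"))  # mobiles-accessories
print(slugify("Brand:Apple"))            # brand-apple
```

The resulting segment can then be used in the path instead of an encoded query parameter such as `q=Brand%3AApple`.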

HTML Validations

HTML code on your web page should comply with the standards set by the World Wide Web Consortium (W3C), the organization that issues the HTML standards.

Why validate HTML code?

- HTML or CSS should be free from syntax errors

- It helps cross-browser and cross-platform compatibility

- The code of the website should be simple and easily readable by search engines

- Search engines tend to give importance to sites with clean code

- Search engines reward sites for simplicity and accessibility of the relevant text, which improves visibility

For example:

One commonly understood HTML rule is that every "open" tag must be "closed" by a corresponding tag:

<head>….</head>

Other common errors include a missing attribute, a missing special character, or improper line spacing.
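The open/close rule can be checked mechanically. The sketch below is a simplified tag-balance check built on Python's standard `html.parser`; it is not a substitute for the W3C validator, and it is stricter than the HTML standard, which allows some end tags (for example `</p>` or `</li>`) to be omitted. The names `TagBalanceChecker` and `check_tags` are hypothetical.

```python
from html.parser import HTMLParser

# Elements that never take a closing tag.
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class TagBalanceChecker(HTMLParser):
    """Track open tags on a stack and record tags that are never closed."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.errors = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in VOID_ELEMENTS:
            return
        if tag not in self.stack:
            self.errors.append(f"unexpected </{tag}>")
            return
        # Pop back to the matching open tag; anything skipped was left unclosed.
        while self.stack:
            open_tag = self.stack.pop()
            if open_tag == tag:
                break
            self.errors.append(f"unclosed <{open_tag}>")


def check_tags(html):
    """Return a list of tag-balance problems found in `html`."""
    checker = TagBalanceChecker()
    checker.feed(html)
    checker.close()
    return checker.errors + [f"unclosed <{tag}>" for tag in checker.stack]


good = "<html><head><title>Hi</title></head><body><p>Text</p></body></html>"
bad = "<html><head><title>Hi</title><body><p>Text</p></body></html>"
print(check_tags(good))
print(check_tags(bad))
```

The first document yields no problems, while the second reports the `<head>` element that was opened but never closed.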

Img 3 - An example of W3C validation

Robots.txt Creation

Robots (also known as Web Wanderers, Crawlers, or Spiders) are programs that traverse the Web automatically. A "robots.txt" file tells search engines whether they can access and crawl parts of the website. The file is always named "robots.txt". It can contain any number of user agents and disallowed files and directories.

Img 4 - An example of Robots.txt file

The syntax of the Robots.txt file is as follows:

User-agent:

Disallow:

User-agent: names the search engine crawler that the rules apply to (* matches all crawlers)

Disallow: lists the files and directories to be excluded from crawling

A # sign can be added at the beginning of a line to mark it as a comment

In addition, pages such as sign-up pages, payment gateway pages, and bank transaction pages can be disallowed so that compliant search engines do not crawl them. Note that robots.txt is publicly readable and only advisory, so it should not be the sole protection for sensitive data.
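To see how a crawler interprets these rules, a robots.txt file can be parsed with Python's standard `urllib.robotparser` module. This is a small sketch; the disallowed paths and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
# Keep crawlers out of checkout and sign-up pages
User-agent: *
Disallow: /checkout/
Disallow: /signup/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether any crawler ("*") may fetch each URL.
checkout_allowed = parser.can_fetch("*", "http://www.example.com/checkout/payment")
products_allowed = parser.can_fetch("*", "http://www.example.com/products/")
print(checkout_allowed, products_allowed)
```

Here the checkout URL is blocked by the `Disallow: /checkout/` rule, while the products URL matches no rule and is therefore allowed.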

Canonicalization

Canonicalization is the process of picking the best URL when there are several choices, and it usually refers to the homepage. For example, most people would consider the following to be the same URL:

www.example.com

example.com

www.example.com/index.html

example.com/index.html

Technically, all of these URLs are different. A web server could return completely different content for each of them. When Google "canonicalizes" a URL, it tries to pick the URL that seems like the best representative from that set. Multiple URLs with the same content can be treated as duplicates by search engines.

How to rectify a canonical issue?

To tell search engines which URL you prefer, add a rel="canonical" link element to the <head> of each duplicate page, pointing to your preferred version:

For example: <link rel="canonical" href="http://www.example.com"/>
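The same idea can be applied on the server side by normalizing every variant to one preferred form before emitting the link element. The sketch below assumes the "www" host is the preferred version and that "index.html" is the default document; both choices, and the function name `canonical_url`, are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Map host and index.html variants of a URL to one preferred form."""
    if "://" not in url:
        url = "http://" + url  # assume http when no scheme is given
    parts = urlsplit(url)

    host = parts.netloc.lower()
    if not host.startswith("www."):
        host = "www." + host  # assumption: the www host is preferred

    path = parts.path
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]  # drop the default document name
    if not path:
        path = "/"

    return urlunsplit((parts.scheme, host, path, parts.query, ""))


variants = [
    "www.example.com",
    "example.com",
    "www.example.com/index.html",
    "example.com/index.html",
]
canonical = {canonical_url(v) for v in variants}
print(canonical)

for preferred in canonical:
    print(f'<link rel="canonical" href="{preferred}"/>')
```

All four variants collapse to a single URL, which is the value to place in the `href` of the canonical link element.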
